Prohibited Artificial Intelligence Practices, Classification (Art. 6) and Requirements (Ch. 2)

The EU legal framework is built around the notion that AI should be ethical and trustworthy. It is therefore no surprise that AI practices that contradict basic human rights are prohibited. Article 5 of the AIA (Council, Nov 2022) prohibits AI practices that might lead to physical or psychological harm (Fig. 1).

Classification (Art. 6)

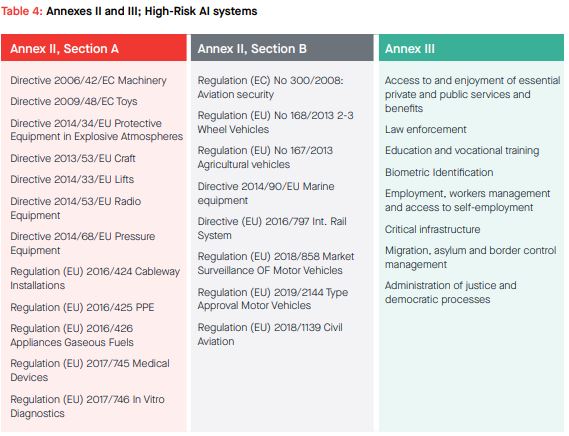

The AIA (Council, Nov 2022) classifies AI systems into two broad categories, High Risk and Low Risk, described in annexes. An AI system is considered high-risk when it is a safety component of a product, or is itself a product, covered by the legislation set out in Annex II (Table 4), or when it is described in Annex III (Table 4). There is one exception: an AI system described in Annex III falls into the high-risk category "unless the output of the system is purely accessory".

As a failsafe for the future, Article 7 empowers the Commission to update the Annex III list when two conditions are met: (a) the AI system is intended to be used in any of the areas covered by Annex III, points 1 to 8; and (b) the AI system poses a risk of harm to health or safety, or a risk to fundamental rights.

Requirements (Ch. 2)

Requirements, the conditions with which a high-risk AI system must comply, are set out in Articles 9 to 15 (Council, Nov 2022). The requirements are described at a high level, and conformity with them is presumed when an AI system conforms to harmonised standards (Art. 40).

The Council proposal (Council, Nov 2022) clarifies that, when an AI system falls under sectoral Union law (e.g., the Medical Device Regulation, MDR), compliance with the AIA requirements should be assessed as part of the conformity assessment process of that sectoral law. It further clarifies that, when high-risk AI systems are subject to obligations or requirements under the relevant sectoral Union law, the AIA aspects may be integrated into the procedures and systems established under that law. This applies to both the Risk Management requirements (Art. 9, Sec. 9) and the Quality Management System obligations (Art. 17, Sec. 2a).

This blog post is an excerpt from our updated whitepaper: Ethical and trustworthy Artificial Intelligence. Please download the full whitepaper to find out more.

The Compliance Navigator blog is issued for information only. It does not constitute an official or agreed position of BSI Standards Ltd or of the BSI Notified Body. The views expressed are entirely those of the authors.